Optimizing the Efficacy of Learning Objectives through Pretests

Abstract

Learning objectives (LOs) are statements that typically precede a study session and describe the knowledge students should obtain by the end of the session. Despite their widespread use, limited research has investigated the effect of LOs on learning. In three laboratory experiments, we examined the extent to which LOs improve retention of information. Participants in each experiment read five passages on a neuroscience topic and took a final test that measured how well they retained the information. Presenting LOs before each corresponding passage increased performance on the final test compared with not presenting LOs (experiment 1). Actively presenting LOs increased their pedagogical value: Performance on the final test was highest when participants answered multiple-choice pretest questions compared with when they read traditional LO statements or statements that included target facts (experiment 2). Interestingly, when feedback was provided on pretest responses, performance on the final test decreased, regardless of whether the pretest format was multiple choice or short answer (experiment 3). Together, these findings suggest that, compared with the passive presentation of LO statements, pretesting (especially without feedback) is a more active method that optimizes learning.

INTRODUCTION

By the end of this paper, readers should be able to: 1) examine the effectiveness of learning objectives (LOs) on retention of expository text, 2) evaluate a method to present LOs that optimizes learning, and 3) explain why this method is better than simply providing LOs in the form of traditional statements.

LOs, like the ones above, are a pedagogical tool that has been used by instructors for decades, in a variety of educational contexts, primarily to evaluate student outcomes (Cleghorn and Levin, 1973; Hartley and Davies, 1976; Bloch and Bürgi, 2002; Blanco et al., 2014; Lacireno-Paquet et al., 2014). Each year, secondary and postsecondary institutions devote resources, labor, and time to develop websites, offer workshops, and provide consultations on creating and assessing LOs for programs and courses (e.g., Guilbert, 1981; Prideaux, 2000; MacFarlane and Brumwell, 2016). However, most of these guidelines are unsupported by empirical research. For example, while Stiggins et al. (2004) suggest that posting LOs improves academic achievement, they provide no evidence of this improvement. To date, very few studies have systematically evaluated the effect of LOs on student learning. Moreover, researchers tend to use various terminologies, such as “learning objectives,” “learning outcomes,” “behavioral objectives,” and “instructional objectives” when discussing this phenomenon (these terms are often used interchangeably; Hartley and Davies, 1976; Allan, 1996; Harden, 2002; Hussey and Smith, 2008; Adams, 2015). Although there is no clear consensus on a specific terminology, Harden (2002) makes a functional distinction between instructional objectives, which are used to prepare and organize lectures (instructor focused), and learning objectives, which are used to help students guide their learning (student focused).

In this paper, we define LOs as knowledge about specific information that students should learn by the end of a study session (Hartley and Davies, 1976). For example, in an introductory biology lecture, one LO might be: “By the end of this lecture, students should be able to describe the difference between prokaryotic and eukaryotic cells.” The extent to which students achieve this LO can be assessed on a subsequent test or assignment.

Scholars have proposed several explanations for how LOs can benefit learning. LOs guide instruction and direct student attention to key information (Rothkopf and Billington, 1979; Wang et al., 2013); facilitate self-regulated learning practices, such as organizing notes and monitoring learning progress (Levine et al., 2008; Reed, 2012; Osueke et al., 2018); increase student engagement (Armbruster et al., 2009; Reynolds and Kearns, 2017) and confidence in course content (Wang et al., 2013; Winkelmes et al., 2016); decrease ambiguity in test expectations (Wang et al., 2013); and foster a connection between course content and its usefulness (Simon and Taylor, 2009; Reed, 2012). In a study by Osueke et al. (2018), students in a molecular biology course were asked how they would use LOs to study for an upcoming exam; almost half of them (~47.4%) converted LOs into practice questions that they answered, and the rest employed LOs as a tool to guide their studying.

In summary, the existing literature suggests that students may use and benefit from LOs in several ways, but does this translate into learning gains? Prior studies examining LOs have included confounds that make it difficult to determine whether LOs improve learning (e.g., Dalis, 1970; Johnson and Sherman, 1975; Raghubir, 1979). For example, Armbruster et al. (2009) revised a biology course by incorporating several active-learning strategies, including labeling all test questions with the corresponding LOs. Although the course revision led to an increase in academic performance, it is unclear whether this improvement was due to the implementation of LOs or other active-learning strategies or both.

CURRENT STUDY

Teachers devote time and resources to incorporate LOs into educational practice, and students believe that this enhances their learning (e.g., Reed, 2012). However, such practices have developed in the absence of systematic research, and both educators and students can make inaccurate judgments of learning (e.g., Hartwig and Dunlosky, 2012; Yan et al., 2014). The theoretical contribution of the present research is to advance our understanding of the factors that make LOs more effective in the context of reading academic passages.

Experiment 1 examined whether presenting LOs before reading academic passages enhanced learning compared with not presenting the LOs. At the very least, LOs should benefit text-based learning by directing students’ attention to important information (Rothkopf and Billington, 1979). Experiment 1 also assessed whether LOs should be provided at the beginning of the passage (standard practice) or interpolated throughout the passage, such that they are presented immediately before the related content.

Experiment 2 examined the impact of a more active method to deliver LOs in the form of pretests. Pretests are composed of questions about the to-be-learned material and are often used to measure prior knowledge; however, a growing literature demonstrates that pretests can also enhance subsequent learning (e.g., Rickards, 1976; Hamaker, 1986; Richland et al., 2009). Pretests and LOs may similarly direct attention to important, potentially testable information in the subsequent lecture or text (Rothkopf and Billington, 1979). However, unlike LOs, pretests may have the added benefit of informing students about types of test questions to expect and could encourage more active-learning behaviors. In other words, pretesting could facilitate a form of metacognitive scaffolding (Osman and Hannafin, 1994). For example, incorrect attempts at answering pretest questions could foster the awareness of knowledge gaps and curiosity to seek self-feedback in subsequent reading. In experiment 2, we examined whether learning increased when presenting LOs as multiple-choice (MC) pretest questions compared with presenting LOs passively as traditional statements.

Experiment 3 examined factors that may further the learning gains of actively presenting LOs through pretesting. Specifically, we examined pretest question format (short answer [SA] or MC) and inclusion of immediate corrective feedback. If a critical benefit of pretesting is to encourage an active search for self-feedback during subsequent study sessions, then providing explicit feedback immediately following the pretest may actually reduce the learning gains.

EXPERIMENT 1

The goal of experiment 1 was to: 1) examine whether LOs increase student learning relative to no LOs and 2) test whether interpolating LOs during study results in better retention. In a standard approach, LOs are traditionally massed together and presented before a learning session. However, course material is typically grouped by modules, units, or themes. The interpolated presentation of LOs may be more beneficial than massing for several reasons. First, students are often expected to process and retain large volumes of information in a single learning session. This overwhelming experience could potentially increase cognitive load and make it difficult to attentively select important information. Providing LOs at the beginning of the lesson can direct attention toward those particular areas (Rothkopf and Billington, 1979). Second, interpolating LOs may further reduce cognitive load by directing attention at smaller chunks of to-be-learned material, which may facilitate better association and integration.

Method

Participants and Design.

One-hundred-and-sixty-four undergraduate students (M age = 18.54, SD = 1.72) from McMaster University enrolled in Introductory Psychology participated in the experiment in exchange for course credit. All participants provided informed consent. A final question at the end of the experiment asked participants to state whether they had ever been taught any of the target information, which confirmed all were unfamiliar with the content. All experiments reported here were approved by the McMaster Ethics Board.

Participants studied five passages and then completed a final test that measured comprehension. Participants were randomly assigned to one of three between-subjects conditions: no LOs were presented (control; N = 56; 14 males); all LOs were presented at the beginning before the first passage (LOs massed; N = 53; 10 males); and the corresponding LOs were presented before each passage (LOs interpolated; N = 55; 12 males). A power analysis was conducted using G*Power software (Faul et al., 2007) based on a one-way analysis of variance (ANOVA) with three groups. Assuming a medium effect size of f = 0.25, a minimum of 159 participants was required to achieve 80% power (α = 0.05). This analysis was used to calculate the number of participants recruited in experiments 1 and 2. We did not have access to participants’ cognitive aptitude (e.g., working memory capacity) or academic abilities (e.g., grade point average) that could ensure homogeneity across the different conditions on those measures. However, our data-collection protocol in all three experiments included a sequential random assignment to conditions, which minimized any variation that could have resulted from factors such as the time of day or week of data collection.
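The required sample size can be sanity-checked by simulation. The sketch below is not G*Power's algorithm; it is a Monte Carlo approximation that assumes normally distributed scores with unit variance, equal group sizes, and a hypothetical pattern of group means (−d, 0, +d) scaled so that Cohen's f = 0.25. The function names `f_crit_df1_2` and `simulated_power` are illustrative and do not come from the original study.

```python
import math
import random


def f_crit_df1_2(df2, alpha=0.05):
    """Upper-alpha critical value of F(2, df2).

    With 2 numerator df the survival function has the closed form
    (1 + 2x/df2) ** (-df2/2), which can be inverted directly.
    """
    return (df2 / 2.0) * (alpha ** (-2.0 / df2) - 1.0)


def simulated_power(n_total, f=0.25, alpha=0.05, reps=20000, seed=1):
    """Monte Carlo power of a one-way ANOVA with three equal groups."""
    k = 3
    n = n_total / k                  # per-group size (equal allocation assumed)
    df2 = n_total - k
    d = f * math.sqrt(1.5)           # means (-d, 0, d) with sigma = 1 yield Cohen's f
    mus = (-d, 0.0, d)
    crit = f_crit_df1_2(df2, alpha)
    rng = random.Random(seed)
    hits = 0
    for _ in range(reps):
        # Each group mean is normal with SD 1/sqrt(n).
        means = [rng.gauss(mu, 1.0 / math.sqrt(n)) for mu in mus]
        grand = sum(means) / k
        ms_between = n * sum((m - grand) ** 2 for m in means) / (k - 1)
        # Within-group sum of squares is chi-square(df2) = Gamma(df2/2, scale 2).
        ms_within = rng.gammavariate(df2 / 2.0, 2.0) / df2
        if ms_between / ms_within > crit:
            hits += 1
    return hits / reps


power = simulated_power(159)
print(f"critical F(2, 156) = {f_crit_df1_2(156):.3f}, simulated power = {power:.3f}")
```

With 159 participants the estimate should land close to the 80% target; the exact value varies slightly with the seed and the number of replications.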

Materials.

The materials consisted of five modified passages on the topic of mirror neurons drawn from a neuroscience textbook (Squire et al., 2012). Each passage, which consisted of a single paragraph ranging from 171 to 267 words, focused on a central theme. Passage 1: What are mirror neurons? Passage 2: What are the different brain areas involved in mirror neurons? Passage 3: What are the neurophysiological techniques used to measure mirror neurons? Passage 4: What is the functional role of mirror neurons? Passage 5: What recent evidence exists to advance our understanding of the role of mirror neurons?

Participants were exposed to three LO statements per passage, for a total of 15 LO statements over the five passages. These statements were based on key facts covered in each passage and did not include the correct response. For example, in passage 1, participants learned that mirror neurons are located in the ventral premotor cortex. The corresponding LO was “In the first passage, you will learn about where the mirror neurons are located.” The answers to all LOs were explicitly stated in the passages. The final test consisted of 15 applied MC questions. Each question corresponded to an LO fact and therefore targeted the same content. According to the Blooming Biology Tool (Crowe et al., 2008), the LOs in the current study targeted basic but necessary knowledge (e.g., memorization and recall of facts), whereas the final test questions assessed the application of that knowledge (i.e., conceptual understanding). See Table 1 for a sample of the materials used in all experiments; the top section (“LO statement”) and bottom section (“Final test question”) pertain to experiment 1.

| Question type | Example |
|---|---|
| LO statement | In the first passage you will learn where the mouth movements are located in the frontal lobe. |
| Multiple-choice pretest question | In the frontal lobe, where are the mouth movements located? |
| Statement with answer | In the frontal lobe, the mouth movements are located in the posterior region of the inferior frontal gyrus. |
| Short-answer pretest question | In the frontal lobe, where are the mouth movements located? |
| Final test question | If Lindsay damages the posterior region of her inferior frontal gyrus, which of the following actions can she not perform? |

Procedure.

Data were collected using LimeSurvey. The experiment took 1 hour to complete and consisted of two phases: the study phase and the final test phase. In the study phase, participants were told that they would read passages on a neuroscience topic and then complete a final test on how well they learned the content. Participants were also told that they might be asked to engage in additional study activities before any given passage. These activities would involve reading LOs, which were described to the participants as simple statements that would prepare them for the to-be-studied content. Participants were encouraged to study these statements carefully and look for that information in the passages, because it would help them on the final test. The specific instructions related to the study activities were: “You may engage in study activities before a passage. In one activity, you may be presented with clear learning objectives—these are statements that articulate the important information you are expected to learn by the end of the session. Read the statements carefully, use them to guide your study, and look for the information in the passages. Doing so will help you on the final test.”

During the study phase, the passages were presented one at a time for 5 minutes each. In the control condition (no LOs), participants studied the five passages without any LOs presented. The LOs-massed condition was identical to the control condition, except that all 15 LOs were presented, one at a time, before the first passage. Participants read each LO statement at their own pace and clicked a button on the screen to proceed to the next LO. In the LOs-interpolated condition, the 15 LOs were distributed across the study phase: the three LO statements for each passage were presented, one at a time, immediately before that passage.

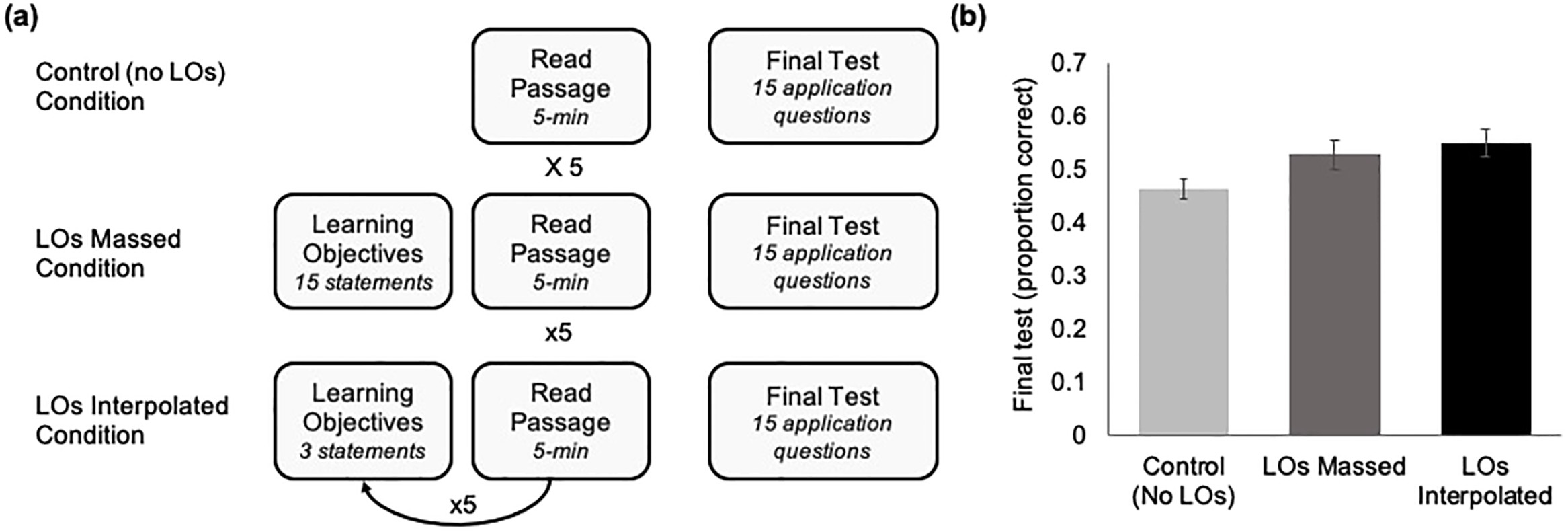

After the study phase, participants engaged in a 5-minute distractor task, in which they were asked to list animals and countries that start with each letter of the alphabet. The final test phase consisted of 15 randomly ordered four-option MC questions. These questions were mandatory, self-paced, and tested the same key facts covered in the LO statements. Participants did not have access to the passages while they were answering the test questions. Once they completed the test, they were debriefed and dismissed. See Figure 1a for an overview of the procedure.

FIGURE 1. General procedure (a) and results (b) of experiment 1, which examines performance on a final comprehension test as a function of two learning objectives (LOs) conditions and a control condition. Error bars represent SEM.

Results and Discussion

We examined whether performance on the final test differed depending on the three conditions (see Figure 1b). An ANOVA showed that the effect of condition was significant, F(2, 161) = 3.28, MSE = 0.03, p = 0.040, ηp² = 0.04. Pairwise comparisons, adjusted for multiple comparisons using the Bonferroni method, indicated that the average performance on the final test was significantly lower in the control condition (M = 0.47, SD = 0.15) compared with the LOs-interpolated condition (M = 0.55, SD = 0.20), t(109) = −2.58, p = 0.044, d = 0.49, and nonsignificantly lower in the control condition compared with the LOs-massed condition (M = 0.53, SD = 0.20), t(107) = −1.91, p = 0.21. However, average performance on the final test was similar between the LOs-massed condition and the LOs-interpolated condition, t(106) = −0.57, p = 0.999.
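The partial eta squared reported for the omnibus test can be recovered from the F ratio and its degrees of freedom. The helper below is a minimal sketch; the function name `partial_eta_squared` is illustrative, and the calculation relies on the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error).

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Recover partial eta squared from an F ratio and its degrees of freedom."""
    ss_ratio = f_value * df_effect   # equals SS_effect / MS_error
    return ss_ratio / (ss_ratio + df_error)


# Omnibus test from experiment 1: F(2, 161) = 3.28
eta_p2 = partial_eta_squared(3.28, 2, 161)
print(round(eta_p2, 2))  # 0.04
```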

In summary, the results suggest that presenting LOs during study increased learning of academic passages compared with not presenting LOs. While the pattern of data was consistent with the possibility that interpolated LOs may be particularly beneficial (mean test performance was highest in that condition), they did not result in statistically better test performance than massed LOs.

EXPERIMENT 2

In experiment 1, we found that interpolating LOs across study improved learning compared with providing no LOs at all. However, interpolating LOs across study did not significantly benefit learning beyond massing LOs before study. In experiment 2, we took a different approach in our attempt to bolster the effectiveness of LOs: We harnessed the robust educational benefit of pretesting (Pressley et al., 1990; Richland et al., 2009; Little and Bjork, 2016) by converting LOs to MC questions that were presented as a pretest before study.

Pretests—taking tests before target information is presented—have the potential to significantly enhance the retention of to-be-remembered information (e.g., Peeck, 1970; Hamaker, 1986; Little and Bjork, 2016). In typical pretesting studies, all participants read a passage on novel content, but some participants first answer a series of questions (i.e., a pretest) on the content before reading the passage. On a final test, which could be immediate or delayed, all participants answer questions that assess their memory and understanding of the pretested content. The typical finding is that pretested information is learned better than non-pretested information. This learning benefit has been recently shown with materials of various complexities (e.g., paired associates: Kornell et al., 2009; vocabulary: Potts and Shanks, 2014; reading passages: Richland et al., 2009; video lectures: Carpenter and Toftness, 2017).

Although there are limited data to directly compare the learning benefits of LOs and pretests (Beckman, 2008), the issue has raised questions on why pretesting may be a potentially powerful method to present LOs. Pretests, like LOs, direct attention to what the teacher likely considers to be important (Bull and Dizney, 1973; Hartley, 1973; Hamilton, 1985; Hamaker, 1986). Pretests and LOs thus orient students to potentially testable information in the subsequent lecture or text. A typical outcome of pretests is unsuccessful retrieval attempts, given that students are not familiar with the material being tested. However, the very act of trying to generate an answer seems to activate relevant prior knowledge, and this leads to more elaborate encoding of subsequently learned information (e.g., Carpenter, 2009, 2011; Kornell et al., 2009; Richland et al., 2009; Little and Bjork, 2011). Moreover, failure to retrieve a correct response to the pretest questions provides students with opportunities to identify and reflect on knowledge gaps (Rozenblit and Keil, 2002) and facilitates feedback-seeking behaviors during subsequent instruction (Shepard, 2002). These self-regulating behaviors are especially important, because students tend to overestimate their knowledge, especially of complex concepts, such as those in science, technology, engineering, and mathematics (STEM) courses (Rozenblit and Keil, 2002).

We hypothesized that LOs presented as pretest questions would enhance the learning of academic passages relative to LOs presented as statements. We also compared the two conditions to a comparable control condition in which participants’ study activity was reading facts (statements that provided the correct responses to matched pretest questions). We did not expect performance on the final test to differ between this control condition and the condition that presented LOs as statements.

Method

Participants and Design.

A new group of 159 undergraduate students (M age = 18.58, SD = 1.56) from McMaster University enrolled in Introductory Psychology participated in the experiment for course credit. Data from one participant were excluded due to a technical error that occurred during the study phase. Participants were randomly assigned to one of three between-subjects conditions: LOs were presented before each passage (LO statements; N = 53; 12 males); MC pretest questions were presented before each passage (MC pretest; N = 53; 9 males); and LOs that also included the correct responses/key facts were presented before each passage (fact statements; N = 52; 11 males). Note that the LO statements condition is identical to the LOs-interpolated condition from experiment 1.

Materials.

The materials were similar to those in experiment 1, except that we added two new conditions. In the pretest condition, we converted the 15 LOs into 15 fact-based MC questions. For example, the LO “In the first passage, you will learn about where the mirror neurons are located” was converted to “Where are the mirror neurons located?” with four response options. Thus, in the MC pretest condition, each passage had three corresponding MC questions. In the fact statements condition, the 15 LO statements were modified to include the targeted facts. These facts were also the correct responses on the MC questions. In the above example, the fact statement read “In the first passage, you will learn that mirror neurons are located in the ventral premotor cortex.” See Table 1 for a sample of the materials created for the two new conditions.

Procedure.

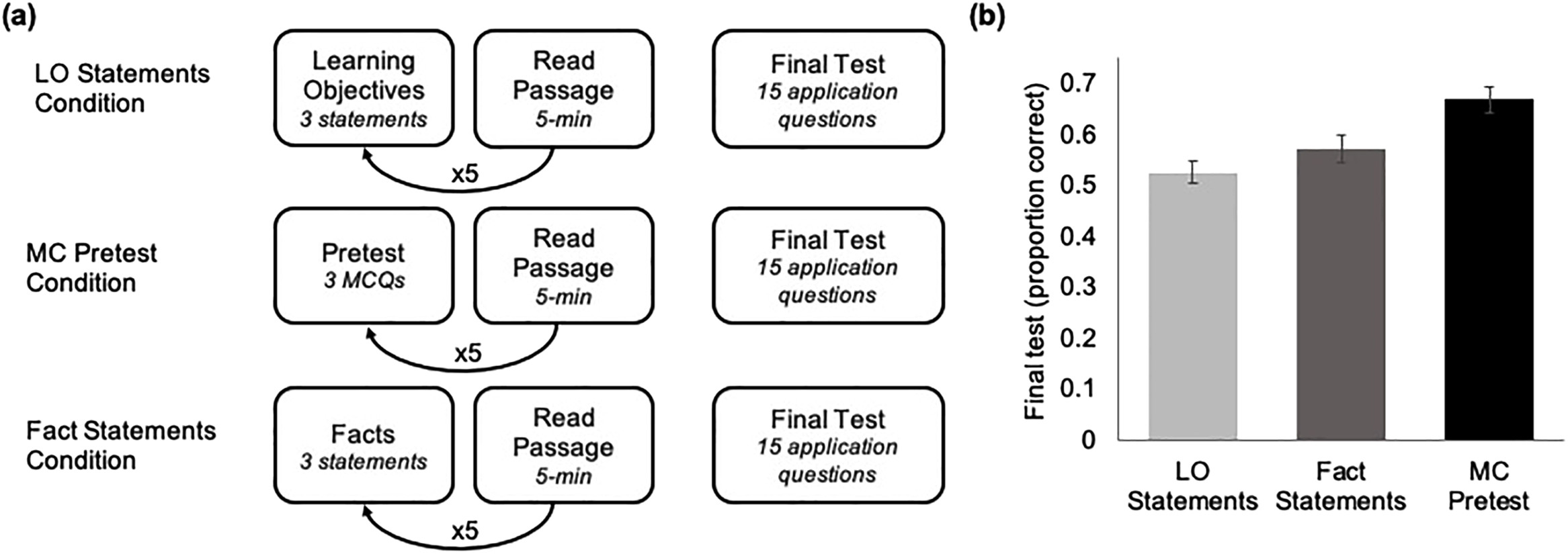

Participants were given the same instructions as in experiment 1 and were presented with the five 5-minute passages. Before each passage, participants read three LO statements, read three fact statements, or responded to three MC pretest questions. All statements and MC questions were self-paced and presented one at a time. No feedback was provided on the MC responses. After the study phase, participants completed the same distractor task and final MC test as in experiment 1. The MC questions on the final test were different from the pretest MC questions, but tested the same concepts. See Figure 2a for an overview of the procedure.

FIGURE 2. General procedure (a) and results (b) of experiment 2, which examines performance on a final comprehension test as a function of a learning objectives (LOs) condition, a control (fact-only) condition, and a multiple-choice (MC) pretest condition. Error bars represent SEM.

Results and Discussion

Mean performance on the pretest questions was M = 0.35, SD = 0.14. We examined whether performance on the final test differed depending on the three conditions (see Figure 2b). An ANOVA showed that the effect of condition was significant, F(2, 155) = 8.84, MSE = 0.03, p < 0.001, ηp² = 0.10. Pairwise comparisons, adjusted for multiple comparisons using the Bonferroni method, indicated that the average performance on the final test was significantly higher in the MC pretest condition (M = 0.67, SD = 0.18) compared with the LO statements condition (M = 0.53, SD = 0.15), t(104) = 4.34, p < 0.001, d = 0.84, and compared with the fact statements condition (M = 0.57, SD = 0.19), t(103) = 2.60, p = 0.019, d = 0.51. However, the mean performance on the final test was similar between the LO statements condition and the fact statements condition, t(103) = 1.35, p = 0.559.
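The pairwise effect size and t statistic for the MC pretest versus LO statements comparison can be approximated from the reported summary statistics. The sketch below assumes a pooled-SD Cohen's d and a pooled-variance Student's t; the paper does not state which formulas were used, and the function names are illustrative. Because the inputs are rounded to two decimals, the recomputed values may differ slightly from the reported ones.

```python
import math


def pooled_sd(sd1, n1, sd2, n2):
    """Pooled standard deviation of two independent groups."""
    return math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))


def cohens_d(m1, sd1, n1, m2, sd2, n2):
    """Standardized mean difference using the pooled SD."""
    return (m1 - m2) / pooled_sd(sd1, n1, sd2, n2)


def t_from_summary(m1, sd1, n1, m2, sd2, n2):
    """Student's t for two independent groups, from summary statistics."""
    se = pooled_sd(sd1, n1, sd2, n2) * math.sqrt(1.0 / n1 + 1.0 / n2)
    return (m1 - m2) / se


# MC pretest (M = 0.67, SD = 0.18, N = 53) vs. LO statements (M = 0.53, SD = 0.15, N = 53)
d = cohens_d(0.67, 0.18, 53, 0.53, 0.15, 53)
t = t_from_summary(0.67, 0.18, 53, 0.53, 0.15, 53)
print(f"d = {d:.3f}, t({53 + 53 - 2}) = {t:.2f}")
```

The degrees of freedom follow from the two group sizes (53 + 53 − 2 = 104), which matches the reported t(104).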

We found that, even though most pretest questions were answered incorrectly (35% correct), responding to these questions resulted in significantly higher performance compared with reading traditional LO statements or statements that included the correct responses. To our knowledge, this experiment is the first in the literature to directly compare LOs as statements with LOs as pretests. Moreover, the observed learning gain from pretests demonstrates that, unlike LO statements, the benefit of pretesting goes beyond directing attention to critical information. Support for this hypothesis comes from a series of experiments by Richland et al. (2009), in which participants were given a pretest to complete or were given additional studying time. In both conditions, testable content was emphasized (e.g., through italics and bold keywords), giving all students an equal opportunity for attention direction. However, recall of information was better for students who completed the pretest, suggesting that pretests not only direct attention to critical information, but also trigger other processes that promote deeper processing of subsequent information. For instance, given that pretests typically result in unsuccessful retrieval attempts, they could encourage feedback-seeking behaviors (e.g., Kulik and Kulik, 1988) and facilitate the encoding and integration of new information (Roediger and Butler, 2011; Carpenter and Yeung, 2017). Experiment 3 further explores this hypothesis.

EXPERIMENT 3

Experiment 2 demonstrated that pretests may be a more effective method to actively signal LOs than passively presenting factual statements. This finding raises two important issues regarding pretesting. First, students typically favor immediate feedback. While feedback is central to learning (e.g., Hattie and Timperley, 2007; Butler and Roediger, 2008), most studies on pretesting do not provide feedback on responses (e.g., Geller et al., 2017; Carpenter et al., 2018). Second, instructors typically want to know whether pretest questions should be in the format of MC or SA. Research on pretesting has incorporated both formats, with a majority of the studies using cued recall and open-ended questions, with no direct comparison of which format produces greater learning (but see Little and Bjork, 2016, for evidence that MC pretest questions, when constructed with competitive alternatives, can be more effective than cued recall). Thus, the goal of experiment 3 was to examine the impact of two aspects of pretest questions on the subsequent learning of academic passages: 1) feedback (present or absent), and 2) question format (MC or SA).

Feedback on Pretest Responses

When students are uncertain about their responses to test questions, they may seek immediate feedback (Kulik and Kulik, 1988; Dunlosky and Rawson, 2015), which can subsequently enhance their learning (e.g., Finn and Metcalfe, 2010; Carpenter et al., 2012). However, some research suggests that generating errors can promote learning even when feedback on the responses is delayed (e.g., Kornell, 2014). In the case of pretests, feedback is not only slightly delayed, but also actively sought—students must discover the answers themselves in the lecture that immediately follows. This can promote deeper processing of the information in the lecture (e.g., Berlyne, 1962). Conversely, providing corrective feedback immediately following pretest questions may negate gains in learning by promoting a passive approach, in which students could potentially wait for and memorize the corrective feedback. This can promote shallow processing of information in the lecture (Kornell and Rhodes, 2013). Hausman and Rhodes (2018) provide initial support for this hypothesis. In their study, two groups completed an SA pretest and received corrective feedback on their responses, but only one group actually read the passages. Nevertheless, performance on a final comprehension test was comparable between groups, suggesting that participants simply memorized the explicit facts from the feedback, making the study of the passages unproductive. In the current experiment, we therefore hypothesized that withholding feedback on pretest responses would enhance the learning of academic passages relative to providing feedback.

Question Format of Pretests

Studies that show learning benefits of pretesting have used various formats of pretest questions, including MC, cued recall, free recall, and SA (e.g., Peeck, 1970; Bull and Dizney, 1973; Hartley and Davies, 1976; Rickards et al., 1976; Grimaldi and Karpicke, 2012; Little and Bjork, 2016; Carpenter and Toftness, 2017). It is currently unclear which test format is superior, and there are practical and theoretical reasons to explore this question. Practically, MC testing is used extensively in secondary and postsecondary classrooms and is increasingly incorporated into audience response systems (Mayer et al., 2009). MC tests are cost-effective, take less time to administer, and require minimal instructor time to grade compared with SA tests. Given that this testing format is commonplace in educational settings, it is important to explore whether MC and SA testing produce similar learning benefits when implemented as pretests.

In the testing effect (i.e., posttest) literature, which states that recalling information from memory enhances learning of that information (e.g., Roediger and Karpicke, 2006; Roediger and Butler, 2011), a majority of the studies use free recall or SA tests. While some findings suggest that both MC and SA questions can be equally effective at promoting learning (e.g., McDermott et al., 2014), others suggest that SA questions are more effective than MC questions, because the former promote retrieval processes, whereas the latter may rely on recognition processes, given that the correct response is present among the alternatives (e.g., Glover, 1989; Carpenter and DeLosh, 2006). Thus, attempts that are based on MC questions are aided by several retrieval cues, which can result in a shallower episode of retrieval from memory and a reduced learning effect. This literature, however, is based on retrieval attempts made after the information is studied rather than before. In the case of pretests, students generally have no prior explicit knowledge on the content to be learned. Thus, they are unable to rely on and benefit from additional cues that are provided in the question to generate a response. However, Bjork et al. (2015) proposed that students may still study the alternatives of an MC pretest question, which may heighten attention more when information related to those alternatives is presented in the subsequent lesson. Indeed, Little and Bjork (2016) showed that MC pretests enhanced the learning of related, non-pretested information more than SA pretests. In the current experiment, we also investigated whether pretest format affects learning by directly comparing the effect of SA and MC pretests on the learning of academic passages.

Method

Participants and Design.

A new group of 193 undergraduate students (M age = 18.55, SD = 1.33) from McMaster University enrolled in Introductory Psychology participated in the experiment for course credit. Given a medium effect size of f = 0.25, the power analysis determined that at least 128 participants were required to detect an interaction with 80% power (α = 0.05). The experiment used a two (pretest question format: MC vs. SA) by two (feedback on pretest questions: present vs. absent) between-subjects design. Participants were randomly assigned to one of four conditions: MC pretest without feedback (N = 49; 16 males), MC pretest with feedback (N = 50; 11 males), SA pretest without feedback (N = 47; 8 males), and SA pretest with feedback (N = 47; 12 males).

Materials.

All four conditions in experiment 3 incorporated pretests. For two of the conditions, MC pretests were converted to SA pretests, in which the question stems remained identical, but instead of the four alternatives, participants entered their responses in a box on the computer screen. Note that, although a majority of the MC alternatives did appear in the subsequent passage, they were not the target or correct response to any of the other pretest or final test questions. Participants in two conditions also received corrective feedback on their pretest responses. The feedback stated the correct response to the question, which was identical for all participants regardless of whether participants answered MC or SA questions. For example, for the question “Where are mirror neurons located?” the feedback provided was “ventral premotor cortex.”

Procedure.

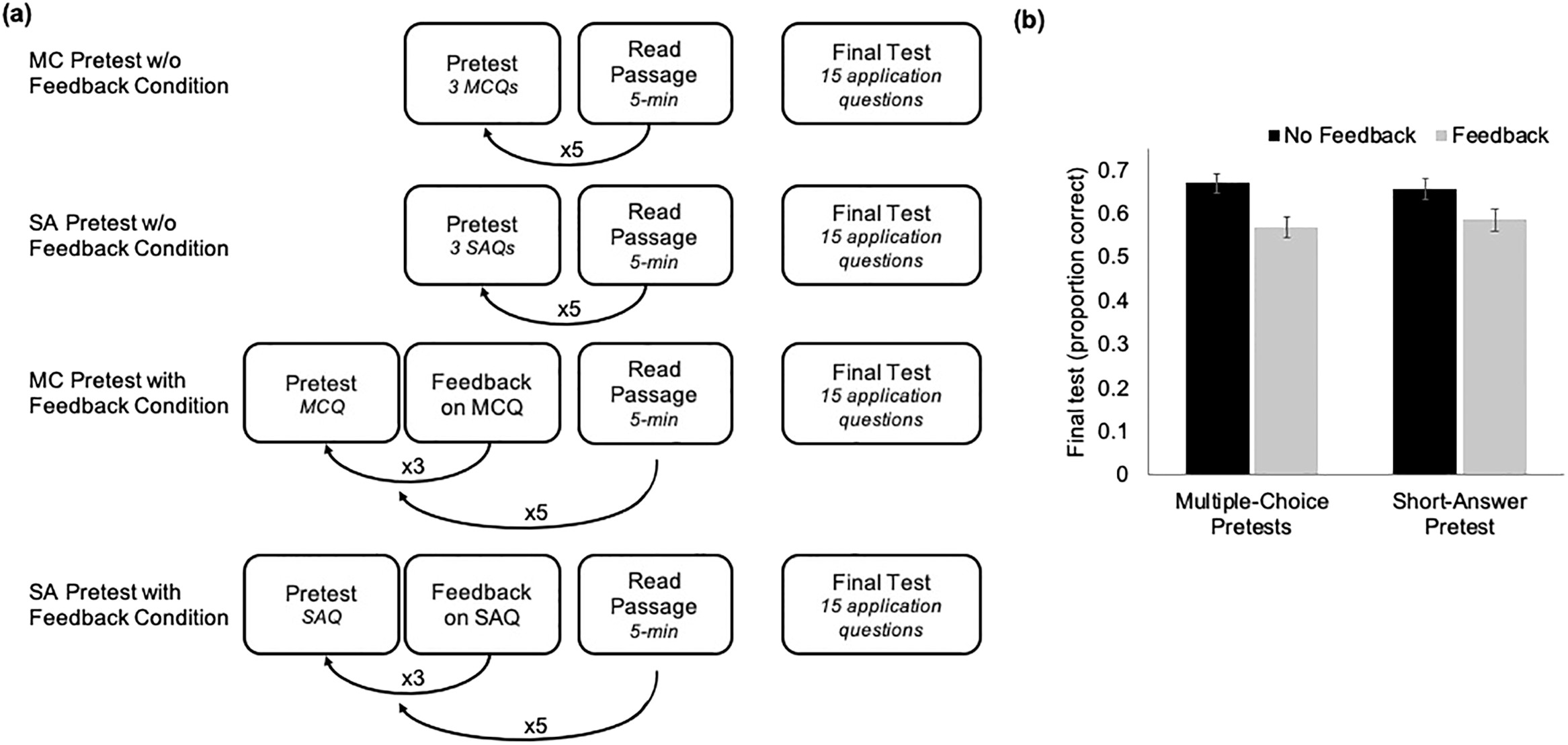

The procedure was similar to that of experiment 2. As before, participants were presented with five 5-minute passages in the study phase. Before each passage, three pretest questions corresponding to the upcoming passage were presented. In the MC-pretest and SA-pretest conditions without feedback, participants selected or entered the response, respectively, and proceeded to the next pretest question. In the feedback conditions, which were self-paced, once participants submitted their selected or entered response for a pretest question, the correct answer was presented on the next screen. This was done for all pretest questions, regardless of whether participants’ responses were correct or incorrect. In the final test phase, participants completed the same final test from experiments 1 and 2, which consisted of 15 application-based MC questions. See Figure 3a for an overview of the procedure.

FIGURE 3. General procedure (a) and results (b) of experiment 3, which examines performance on a final comprehension test as a function of question format of pretests: short-answer (SA) vs. multiple-choice (MC) pretests; and feedback on pretest responses: no feedback or feedback. Error bars represent SEM.

Results and Discussion

Performance on the Pretest Questions.

A two-way ANOVA yielded a nonsignificant main effect of feedback, F(1, 189) = 0.36, MSE = 0.01, p = 0.547, indicating that not providing feedback (M = 0.20, SD = 0.19) on pretest questions resulted in similar performance on the questions compared with providing feedback (M = 0.20, SD = 0.20). As expected, the main effect of question format was significant, F(1, 189) = 575.36, MSE = 0.01, p < 0.001, ηp2 = 0.75, indicating that the average performance on the pretest questions was higher when the questions were in the MC format (M = 0.37, SD = 0.13) compared with the SA format (M = 0.03, SD = 0.04). The interaction between question format and feedback was also nonsignificant, F(1, 189) = 0.36, p = 0.547.
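
The reported effect size for question format can be recovered from the F statistic and its degrees of freedom using the standard identity ηp2 = (F × df_effect) / (F × df_effect + df_error). The short sketch below applies it to the values reported above; the function name is a hypothetical helper introduced here, not part of the authors' analysis.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F statistic and its df."""
    effect_term = f_value * df_effect
    return effect_term / (effect_term + df_error)


# Main effect of question format on pretest performance: F(1, 189) = 575.36.
eta_format = partial_eta_squared(575.36, df_effect=1, df_error=189)
print(f"partial eta squared = {eta_format:.2f}")  # prints 0.75
```

The same function can be applied to any other F test reported with its degrees of freedom.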

Performance on the Final Test.

A two-way ANOVA yielded a nonsignificant main effect of question format, F(1, 189) = 0.00, MSE = 0.03, p = 0.958, suggesting that performance on the final test was similar regardless of whether participants took an MC pretest (M = 0.62, SD = 0.17) or SA pretest (M = 0.62, SD = 0.17). Importantly, however, the main effect of feedback was significant, F(1, 189) = 13.60, MSE = 0.03, p < 0.001, ηp2 = 0.07, suggesting that, as predicted, performance on the final test was significantly lower when participants received feedback on pretests (M = 0.58, SD = 0.17) compared with when they did not receive feedback (M = 0.66, SD = 0.15). The interaction between question format and feedback was nonsignificant, F(1, 189) = 0.43, MSE = 0.03, p = 0.515 (see Figure 3b). In the case of participants who took the MC pretest, those who received feedback (M = 0.57, SD = 0.17) performed significantly worse on the final test relative to participants who did not receive feedback (M = 0.67, SD = 0.15), t(97) = 3.17, p = 0.002, d = 0.64. Similarly, in the case of participants who took the SA pretest, those who received feedback (M = 0.59, SD = 0.18) performed significantly worse on the final test compared with those who did not receive feedback (M = 0.66, SD = 0.15), t(92) = 2.08, p = 0.04, d = 0.43.
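
The follow-up comparisons above can be approximately reconstructed from the reported means, standard deviations, and group sizes. The sketch below computes Cohen's d with a pooled standard deviation and the corresponding independent-samples t statistic. It assumes equal-variance (Student) t tests, which the text does not state explicitly, and because the inputs are rounded to two decimals, the results are expected to differ slightly from the reported values. The function name is hypothetical.

```python
from math import sqrt


def cohens_d_and_t(m1, sd1, n1, m2, sd2, n2):
    """Return (d, t, df) for two independent groups from summary statistics."""
    df = n1 + n2 - 2
    # Pooled standard deviation, weighting each variance by its df.
    pooled_sd = sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df)
    d = (m1 - m2) / pooled_sd
    # Student's t follows from d and the two group sizes.
    t = d / sqrt(1 / n1 + 1 / n2)
    return d, t, df


# MC pretest: no feedback (N = 49) vs. feedback (N = 50).
mc_d, mc_t, mc_df = cohens_d_and_t(0.67, 0.15, 49, 0.57, 0.17, 50)
# SA pretest: no feedback (N = 47) vs. feedback (N = 47).
sa_d, sa_t, sa_df = cohens_d_and_t(0.66, 0.15, 47, 0.59, 0.18, 47)

print(f"MC: t({mc_df}) = {mc_t:.2f}, d = {mc_d:.2f}")
print(f"SA: t({sa_df}) = {sa_t:.2f}, d = {sa_d:.2f}")
```

The degrees of freedom match the reported t(97) and t(92) exactly, and the recomputed t and d values fall close to the reported ones, with the small gaps attributable to rounding in the published summary statistics.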

There may be several reasons for this observed negative effect of feedback on learning. For example, feedback may have reduced both the depth of processing of the passages and the time spent on them: participants may have become overreliant on the feedback and memorized it, which could have resulted in an increased feeling of content fluency and decreased attention during the subsequent study session. While more research is needed to examine when and why feedback on pretest responses is ineffective, the current results provide theoretical insight by suggesting that at least part of the pretesting benefit could come from feedback-seeking behaviors.

Does it matter if pretests are in MC or SA format? The present results suggest that both pretest formats similarly affected subsequent learning. While this finding is consistent with research conducted on posttests (McDermott et al., 2014), it partially deviates from the findings reported by Little and Bjork (2016) on pretests. They showed that MC pretests structured to include competitive, plausible alternatives (i.e., alternatives that are also testable facts and that are incorrect for one question, but correct for another) have the potential to enhance the learning of related, non-pretested information more than SA pretests, which do not include any options. Such a structured MC question encourages the processing of both the correct response and the plausible and testable alternatives that are conceptually related to the pretested information. On the other hand, an SA question, which does not include those alternatives, may direct attention to just the one correct response that is sought by the question. One likely reason we did not see a benefit of MC over SA pretests in the current experiment is that the MC alternatives in our materials were not competitive. Another reason is that we did not measure the learning of non-pretested but related information. Specifically, only the pretested concepts were assessed for retention on the final test. Indeed, Little and Bjork showed similar benefits of MC pretests and SA pretests for the learning of pretested information, which our finding replicates. Future research should explore how pretests can be systematically structured to promote the learning of both pretested and related information.

GENERAL DISCUSSION

The current study examined how LOs can be optimized to promote learning of STEM reading passages. In experiment 1, we found that interpolating LO statements throughout the lesson improves learning (compared with not presenting LOs), particularly when explicit instructions on the importance of LOs are provided in order to direct students’ attention to LO content. In experiment 2, we found that converting LOs into pretest questions can augment the learning gains of traditional LO statements. This finding extends previous research on the pretesting effect (e.g., Grimaldi and Karpicke, 2012; Carpenter and Toftness, 2017). Attempting to answer pretest questions (which resulted in errors) was better than reading statements that included the correct responses to the pretest questions. The act of attempting to answer a question promotes a deeper level of processing.

Finally, we examined the conditions to optimize the benefits of pretesting. In experiment 3, we found that the question format (MC or SA) yielded similar learning gains. This is consistent with the literature on retrieval practice, which demonstrates that different question formats can be equally effective (McDermott et al., 2014). We also found that withholding feedback on pretests enhanced learning compared with providing feedback. Students are highly motivated to seek the correct responses to questions right after taking a test, especially when they make errors (Metcalfe, 2017). In the case of pretests, for which participants had a high rate of unsuccessful retrieval attempts, participants were likely to be more motivated to learn the answers to the pretest questions, which enhanced subsequent processing of the reading passages.

LIMITATIONS AND FUTURE DIRECTIONS

Based on the current findings, it may be tempting to prescribe that LOs should be converted into pretest questions or that teaching or study sessions should begin with pretests. However, such recommendations are premature. The current study is a first step toward demonstrating the learning benefit of LOs when delivered as pretests and the learning benefit of pretests without feedback. While we have built-in replications across the three experiments, the findings are restricted to one topic area from a single course in laboratory contexts. Moreover, while instructors in biology and other STEM courses routinely include a wide range of LOs that vary across different levels of Bloom’s taxonomy (Crowe et al., 2008), the LOs and pretests used in the current study exclusively targeted knowledge of basic facts. In addition to replicating the results across a diverse range of topics and different levels of learning in classroom settings, there are other outstanding questions that remain untested for practical implementation. For example, while LOs and pretests can focus attention on information related to the pre-studied content, they may take attention away from related information that is not pre-studied (Wager and Wager, 1985; Hannafin and Hughes, 1986). Carpenter and Toftness (2017) argued that this is particularly true for self-paced expository text; students tend to fixate on or selectively attend to parts of the text that are covered by the LOs or pretests. Given that the current study did not separately assess the learning of pre-studied versus related information, future research should investigate this critical gap.

In the current study, participants were instructed to pay close attention to pretest questions and were encouraged to actively seek out the correct answers to those questions. Future research may further investigate how attention and motivation may interact with pretesting of LOs. Indeed, there may be a cost associated with the feedback conditions (experiment 3) and the fact statements condition (experiment 2). Being provided with immediate feedback or studying the key facts may have encouraged shallow processing of subsequent passages. This could be due to a lack of motivation to read the passages carefully, as students may have incorrectly assessed that they have sufficiently acquired the key information needed to do well on the final test. Another unexplored question pertains to whether the type of feedback moderates the pretesting effect. Would providing right/wrong feedback rather than corrective feedback (with the correct response) change the way in which participants process the passages (e.g., Hausman and Rhodes, 2018)? Such feedback can be more informative for students who are unable to identify their errors or gaps in knowledge and thus use the feedback as a guide to focus on seeking information they do not know.

CONCLUDING REMARKS

Despite these limitations, the current research is significant in several ways. First, our data support the hypothesis that LOs, when delivered as pretests, can improve student learning. To our knowledge, this is the first study to systematically demonstrate this benefit. Second, the current study extends previous work showing that the pretest question format does not seem to matter (Little and Bjork, 2015, 2016). Third, we demonstrated that providing explicit feedback reduced the pretesting benefit compared with withholding feedback. This specific finding offers theoretical insight into why pretests facilitate learning: pretesting not only directs attention to key information, but could also encourage students to engage in feedback-seeking behaviors. Finally, the current study differs from other pretesting studies in two critical ways. First, almost all prior pretesting studies conducted in the laboratory and in classrooms used identical questions on both the pretests and the final tests. Second, almost all pretesting studies assess memory of basic facts. Our study used different questions on the pretests and the final test, demonstrating learning gains beyond those due to practice or similarity effects. Our study also spanned different levels of Bloom’s taxonomy, demonstrating that knowledge-level pretest questions can facilitate the comprehension and application of pretested information. LOs as pretests have the potential to be an easy-to-implement and cost-effective technique to promote student achievement in STEM classrooms. Understanding the scope and the limits of the observed pretesting benefit can reveal conditions under which learning can be optimized.

FOOTNOTES

1 We found that explicitly stating the importance of these pre-study activities and their benefits on learning encouraged participants to pay attention to them. In a separate study (M age = 18.86, SD = 3.45, 23 males) in which the materials and the procedure were identical to experiment 1 but did not include this instruction, performance on the final test did not differ between a control condition (M = 0.49, SD = 0.20, n = 53) and an LOs-interpolated condition (M = 0.52, SD = 0.20, n = 55), t(106) = −0.84, p = 0.403.

ACKNOWLEDGMENTS

We thank the members of McMaster EdCog Group for their valuable feedback on the article. This research was supported by a Social Sciences and Humanities Research Council Insight (no. 430-2017-00593) and by Athabasca University’s Academic Research Fund (no. 23560) awarded to F.S.