1) Psychosocial refers to how one positions or views themselves in the world, such as the identities one assumes, one's level of confidence, one's sense of well-being, and one's interactions or relationships with others or their environment.

2) In education research, instruments are any tools used to collect data. This can include tests, questionnaires, surveys, and interview or focus group protocols. Typically, instruments are intended to measure latent constructs. Latent constructs are abstract variables that are not directly visible, such as confidence in one's ability to do science, one's knowledge about the structure and function of DNA, or one's experimental design skills. In this paper, the construct of interest is "project ownership," and the paper outlines the development of a survey that can be used to measure project ownership.

3) In general, items are questions or statements on a test or questionnaire. Scaled items are questions or statements for which respondents select a number or point on a scale, such as a rating of 2 (agree) on a scale of 1-5 (strongly agree, agree, neither agree nor disagree, disagree, strongly disagree).

4) In social sciences, theories are logical explanations of social phenomena. Theories explain relationships between relevant variables, which can then be tested empirically.

5) Dimensionality refers to the components of the instrument or construct that comprise the whole. Take for example a chocolate chip cookie. Its "dimensions" are chips, dough, and some amount of baking. Without the dough, it would just be chips. Without the chips or baking, it would just be dough. All three dimensions are needed to comprise a chocolate chip cookie. Some scales include multiple dimensions if multiple ideas comprise the whole construct, as in this paper. Other scales are thought to be measuring a single, unidimensional construct, such as research self-efficacy or confidence in one's ability to do research. For more on dimensionality, see: http://www.socialresearchmethods.net/kb/scalgen.php

6) Reliability is the quality of the instrument - in other words, how reliably or consistently it can measure the intended construct. Reliability is judged in multiple ways, including consistency over time (same person responds the same way at multiple time points) or consistency across respondents (different people respond similarly, for example, when rating the quality of students' responses to an essay question). For more on reliability, see: http://www.socialresearchmethods.net/kb/reliable.php

7) Validity is the idea that the instrument is actually measuring what you think it is measuring. For this paper, validity refers to the idea that students' responses to the survey are indicators of students' actual sense of project ownership - in other words, that it is reasonable and fair to draw conclusions about their sense of ownership based on how they respond to the survey. It is typical to require multiple forms of validity evidence to argue that an instrument is a valid or trustworthy measure of a construct. It is also important to note that validity is "contextual" - meaning that what is valid in one context or for a particular purpose may not be valid in other contexts or for other purposes. Imagine for example you were developing a test to measure 2nd graders' reading abilities. This is unlikely to be a valid measure of reading abilities of high school students. For more on validity, see: http://www.socialresearchmethods.net/kb/constval.php

8) Coefficient alpha, also called Cronbach's alpha, is an indicator of "internal reliability" of an instrument or scale. It is a function of the number of items, the average covariance among pairs of items, and the variance of the total score. The idea is that responses to items purported to measure a single construct should all correlate at a high level. This statistic alone is not a compelling indicator of reliability since its value can be inflated by increasing the total number of items. Rules of thumb suggest a minimum acceptable alpha value of 0.7 or 0.8 (values range from 0.0 to 1.0). For more details, see: http://www.socialresearchmethods.net/kb/reltypes.php
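The verbal description above can be written as a formula. This is the standard textbook form of coefficient alpha, not something taken from the paper itself; the symbols are chosen here for illustration: k is the number of items, \sigma_i^2 is the variance of item i, \sigma_X^2 is the variance of the total score, and \bar{c} is the average covariance among distinct pairs of items.

```latex
\alpha
  = \frac{k}{k-1}\left(1 - \frac{\sum_{i=1}^{k}\sigma_i^2}{\sigma_X^2}\right)
  = \frac{k^2\,\bar{c}}{\sigma_X^2},
\qquad\text{since}\qquad
\sigma_X^2 = \sum_{i=1}^{k}\sigma_i^2 + k(k-1)\,\bar{c}.
```

The second form shows why adding items can inflate alpha: with the average covariance held fixed, the k^2 term in the numerator grows faster than the total-score variance in the denominator.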

9) Known groups validity refers to the idea that two groups can reasonably be expected to differ with respect to the construct being examined. Thus, differences in their responses can be used to confirm that an instrument is useful for discriminating between them. Once this is confirmed, the instrument itself can be used to discriminate among groups.

1) Sometimes because of our experience as instructors, we assume our experiences reflect well known and well accepted ideas. This is often not the case. In this Introduction, note how each claim or logical step in the core argument of the paper is supported by one or more citations. Because biology education research is interdisciplinary, it can be particularly helpful to search for articles with a cross-disciplinary tool such as Google Scholar https://scholar.google.com/.

2) Cognition relates to thinking or learning. Cognitive gains relate to improvements in one’s ability to think and reflect upon a particular lesson or topic.

3) Affect relates to emotions, attitudes, or dispositions. Affective gains relate to positive changes in enjoyment, satisfaction, beliefs, or values.

4) Behavior relates to observable or measurable actions. Behavioral outcomes are changes in actions by individuals as a result of an experience, such as the act of asking questions or choosing to take certain courses.

5) Note the careful language here. The authors do not state that students achieved particular outcomes, but rather that students reported achieving particular outcomes. This is an important distinction because students may be able to report reliably about some of their outcomes, such as gains in confidence, but not be able to reliably report on changes in their knowledge or skills. It is well established in cognitive science and psychology that novices cannot reliably gauge their knowledge and skills. Experts tend to be better able to gauge their knowledge and skills, but have a tendency to underestimate because they are aware of all they don't know. This phenomenon is called the "Dunning-Kruger effect." For more on student self-assessment, see: http://rer.sagepub.com/content/59/4/395.short

6) Likert scales are multipoint ranges of responses to questions or statements, which are commonly used in survey research. For example, on a scale of 1-5, these can be strongly agree, agree, neither agree nor disagree, disagree, and strongly disagree.

7) The term qualitative can mean many things in education research. In this instance, qualitative means that the study made use of qualitative (not quantitative) data in the form of interviews and qualitative methods, specifically assigning meaning to quotes in interviews (see coding below) and then grouping meanings into overarching thematic ideas. For more on qualitative research, see: http://www.socialresearchmethods.net/kb/qual.php

8) Construct validity refers to the overarching idea of validity - that the instrument is measuring what it is intended to measure. For more on construct validity, see: http://www.socialresearchmethods.net/kb/constval.php

9) The field of computational linguistics involves using computer science and computational techniques to analyze language and speech.

10) Content analysis refers to the process of chunking language, either written or oral, into units (e.g., quotes), assigning meaning to the units, and then making inferences about the meaning or patterns of meaning.

11) This entire paragraph aims to build an argument about what ownership is and why it is important.

12) In social sciences, constructs are abstractions that are not directly observable but can be inferred through observable phenomena, such as emotions, attitudes, knowledge, or skills. They can also be thought of as latent variables, which are variables that are inferred based on observable variables such as responses to test or survey items, observation protocols, or interview or focus group questions.

13) Coding is the process of assigning meaning to chunks of language, either written or oral. For more on qualitative coding and analysis, see: https://researchrundowns.com/qual/qualitative-coding-analysis/

14) When defining a construct, it is important to consider not only what counts as part of the construct (indicators) but also what doesn't count (counterindicators). Clear delineation of the boundaries of a construct will result in more accurate measurement and improved interpretation of measurement data.

15) This careful selection and description of the study sample, meaning who the participants are and what their range of experiences are, is important for helping to delineate what counts as ownership and what does not.

15b) The fact that the authors articulate how the sample was selected makes it easier for readers to evaluate the results.

16) These five categories can be considered dimensions of the construct of ownership - elements that make up the idea of ownership. Think of a chocolate chip cookie as the construct and chips, dough, and baking as the dimensions. All three are necessary for the construct of a chocolate chip cookie to exist. A chocolate chip cookie would not be a chocolate chip cookie without its dimensions: chips, dough, and baking. Similarly, if others wanted to use the ownership scale to measure student sense of ownership that results from a particular educational experience, all of the dimensions that comprise ownership would need to be considered.

17) Because so much of social science research is exploring complex phenomena that are not directly observable, researchers must articulate how they operationalize their constructs of interest. In other words, what is the researcher observing that is an indicator of the construct? For example, students' responses to a test question indicate some knowledge about evolution. The "assessment triangle" provides a useful way to think about this (http://www.nap.edu/read/10019/chapter/4?term=%22assessment+triangle%22#44), specifically that there is a construct (e.g., student cognition), an observation that is indicative of the construct (e.g., responses to test questions), and an interpretation of the observation that results in an inference about the construct (e.g., the way the student answered the question indicates something about how they think).

18) This paragraph places this new study in the context of a prior work, Hanauer et al. (2012).

19) The authors are presenting some of the problems with undergraduate research experiences, now commonly called CUREs (course-based undergraduate research experiences; see https://curenet.cns.utexas.edu/), in terms of resources and student outcomes. In addition, they point out the need to develop an instrument that can measure project ownership in the context of course-based research experiences.

20) This description of the CURE survey, the URSSA, and the SURE surveys provides background on the state of the field prior to this work: the features of these instruments, their limitations, and the need for other instruments.

21) The authors describe prior work that this report builds upon.

1) Standard methods sections for an education study include participants (including their personal characteristics and context or environment, such as their institution type, course context, etc.), the process (instruction, instrument development, etc.), data collection methods, and data analysis methods.

2) Minimum sample sizes should be chosen ahead of data collection in order to avoid "p-hacking," which is when researchers collect data until they reach a desired p value. A power analysis should be done for quantitative research or some other evidence-based rationale should be used to decide minimum sample sizes before data collection begins.
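As a rough illustration of what a power analysis involves, the sketch below estimates the number of participants needed per group to compare two group means. It uses the common normal-approximation formula, which is an assumption made here and not a method described in the paper; the function name `n_per_group` and the default values are hypothetical. Dedicated software that uses the t distribution will give slightly larger numbers.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(effect_size_d, alpha=0.05, power=0.80):
    """Approximate participants needed per group for a two-sided,
    two-group comparison of means (normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the test
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    return ceil(2 * (z_alpha + z_beta) ** 2 / effect_size_d ** 2)


# A "medium" expected effect (d = 0.5) versus a "large" one (d = 0.8).
print(n_per_group(0.5))
print(n_per_group(0.8))
```

The smaller the effect a researcher expects, the larger the sample that must be planned for before data collection starts.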

3) Knowing who the participants are helps readers determine how the instrument described here could be useful. For example, the authors tested the instrument with undergraduate students in the United States. Middle school students or practicing scientists might also develop a sense of ownership of their research projects, but the instrument would need to be tested and validated with these groups to make sure they interpret the items as they are intended and that the instrument is useful for discriminating among individuals in other populations.

4) The more different settings data can be collected from, the more likely the results can be generalized because the participants are more likely to reflect a broader sample. In addition, depending on the nature of the instrument, it may be more salient to measure the construct at the course or institution level rather than the student level. Because ownership is specific to a student's interaction with their educational experience, the authors were most interested in measuring at the student level.

5) By stating where the sample population is from, the authors acknowledge the limitations of their study.

6) One issue that is not addressed here is whether students are likely to respond in ways that are influenced by their institutions or courses. In other words, students who are enrolled in the same course are likely to respond more similarly than students in different courses. When this happens, it may be necessary to address this by using statistical models that account for the nesting of the data (i.e., students within courses, within institutions). If however students vary more in their responses within a course or institution than across courses or institutions, then nested models may not be necessary. Nesting is not possible with this dataset because there are not likely to be enough responses within each institution to model variance at the student and course or institution level.

7) It is helpful to provide information about how students were recruited or incentivized to participate in order to evaluate any potential for bias in the sample and also so others can replicate the methods.

8) It is important to include the number of participants who were invited to participate (if known), started to participate, and completed participation. This allows readers to judge how representative the sample might be of a larger population, and thus how generalizable the results might be.

9) For this study, only data from fully completed surveys were used. This helps avoid making assumptions about missing data, which could be missing at random or missing for a reason that results in bias in the results.

10) Manuscripts reporting on studies involving human subjects must include explicit assurance that the research reported was approved or determined to be exempt from review by a local Institutional Review Board (IRB).

11) Providing demographic characteristics for the sample helps readers determine how representative their responses are likely to be of the entire undergraduate student population in the U.S.

12) Pilot testing is an important step in instrument development. Typically, a small group of individuals representing the target population are asked to respond to the items, explain their responses, describe any points of confusion or ambiguity, and possibly suggest improvements to the wording. Their explanations are useful for ensuring the items are being interpreted the way they were intended and are as clear as possible.

13) These authors use a measurement development process espoused by Netemeyer and colleagues (2003), which draws at least in part on the widely used and highly respected framework for validity proposed by Samuel Messick. For more on validity theory, see explanations on YouTube from John Hathcoat at James Madison University. 5-minute introduction at: https://www.youtube.com/watch?v=rYc-coraFNk , and 40-minute lecture at: https://www.youtube.com/watch?v=_HS4lxsoR4Q

14) Clearly defining the construct of interest

15) Writing the items themselves. At this stage, it is common to seek input from experts in the construct(s) being assessed to make sure the items represent the construct. Representation of the construct includes having items that represent all dimensions of the construct as well as the full range of difficulty or agreeable-ness of the construct (e.g., what a respondent might agree to if they have the very minimum level of ownership and what a respondent would agree to only if they reach a full level of ownership, as well as points in between).

16) Rewording items based on responses to the study to eliminate confusions and ambiguities.

17) Collecting data from a larger sample of respondents and conducting analyses that reveal information about the quality of the instrument.

18) If the items are measuring a single construct, then a given respondent should respond similarly to all of the items.

19) Factor analysis is a statistical method that describes variability among many observed, correlated variables (e.g., responses to individual items on a survey) in terms of a lower number of unobserved variables or factors (e.g., latent variables or constructs). For example, in this study, responses to the items related to emotion are more correlated to one another than they are to the items related to ownership. So emotion and ownership are thought to be two factors.

20) Exploratory factor analysis, or EFA, makes no a priori assumptions about how many factors there are, or which items represent which factors.

21) Confirmatory factor analysis should be used when arguments can be made regarding how many factors are represented in an instrument and which items relate to which factors.

22) Cronbach's alpha is used as a measure of internal consistency, or how closely related a set of items are to one another. Ideally, separate items intended to measure the same construct should be correlated. Although it is widely used, there are also widespread concerns about the limitations of this metric as an indicator of reliability. For more on this, consult with a psychometrician or quantitative methodologist.

23) Internal consistency reflects the relationship between items purported to measure the same construct or dimension.

24) A known-groups comparative study is a comparison of results from groups that can reasonably be expected to differ. This type of evidence can be used to make an argument about the validity of a measure.

1) Validity, reliability, and dimensionality are the psychometric properties of a measure (i.e., how an instrument is used in a particular setting with a particular population).

2) For more on acceptable values for Cronbach's alpha and its use as a measure of reliability and internal consistency, see: http://shell.cas.usf.edu/~pspector/ORM/LanceOrm-06.pdf

3) This refers to a subsection of the Cronbach’s alpha procedure that allows the researcher to evaluate whether the alpha value changes if items are deleted. In other words, what would happen to the internal reliability of the scale if an item were deleted? These four items reduced the reliability of the scale up front and thus were taken out of the analysis.
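The "alpha if item deleted" idea can be sketched in a few lines. The responses below are hypothetical and are not data from the paper; the function names are also illustrative. The fourth item is deliberately written to be inconsistent with the others, so removing it raises alpha.

```python
from statistics import variance


def cronbach_alpha(items):
    """items: one list of scores per item, with respondents in the same order."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # total score per respondent
    item_variance_sum = sum(variance(col) for col in items)
    return (k / (k - 1)) * (1 - item_variance_sum / variance(totals))


def alpha_if_item_deleted(items):
    """Recompute alpha with each item left out in turn."""
    return [cronbach_alpha(items[:i] + items[i + 1:]) for i in range(len(items))]


# Hypothetical responses from six respondents on a 1-5 scale.
responses = [
    [4, 5, 3, 4, 2, 5],  # item 1
    [4, 4, 3, 5, 2, 5],  # item 2
    [5, 5, 2, 4, 1, 4],  # item 3
    [2, 4, 5, 1, 3, 2],  # item 4: deliberately inconsistent with the rest
]

full_alpha = cronbach_alpha(responses)
deleted_alphas = alpha_if_item_deleted(responses)
print(round(full_alpha, 2))
print([round(a, 2) for a in deleted_alphas])
```

An item whose removal raises alpha well above the full-scale value is a candidate for deletion, although theoretical reasons for keeping it should also be weighed.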

4) This reflects two errors in the paper itself - 15 items are presented even though it says 18 here. The final survey contains 16 items; one is missing from this list. The total items tested are listed in Table 3 and the final version of the survey is presented in Table 4.

5) This is the correlation between scores on the specific item and the total score on the survey. Generally, responses to a given item should correlate with responses to the entire survey if they are measuring the same or related constructs.
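A minimal sketch of an item-total correlation follows. It computes the "corrected" variant, in which the item is left out of the total it is correlated with; whether the paper used the corrected or uncorrected version is not stated here, so treat that choice as an assumption. The data and function names are hypothetical.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def corrected_item_total(items):
    """Correlate each item with the sum of all the other items."""
    correlations = []
    for i, col in enumerate(items):
        rest = [sum(scores) - scores[i] for scores in zip(*items)]
        correlations.append(pearson(col, rest))
    return correlations


# Hypothetical responses from six respondents on a 1-5 scale.
responses = [
    [4, 5, 3, 4, 2, 5],  # item 1
    [4, 4, 3, 5, 2, 5],  # item 2
    [5, 5, 2, 4, 1, 4],  # item 3
    [2, 4, 5, 1, 3, 2],  # item 4: deliberately inconsistent with the rest
]

item_total = corrected_item_total(responses)
print([round(r, 2) for r in item_total])
```

In this made-up data set the first three items correlate positively with the rest of the scale, while the fourth correlates negatively, which flags it for closer inspection.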

6) This statistic is a measure of the proportion of variance among variables (in this case the items) that might be common variance (in this case, ownership or dimensions of it). A rule of thumb is that KMO values between 0.8 and 1 indicate sampling is adequate, and values less than 0.6 indicate sampling is not adequate although some authors set this cut-off at 0.5 as was done here.

7) Certain statistics assume normal distribution of one-dimensional or univariate data. Thus, data must be examined to determine whether this is a fair assumption before using these statistics. Normality can also be examined for multiple variables.

8) Maximum likelihood estimation is a method for estimating some unknown parameter (e.g., ownership) based on the distribution of observed values from a sample (i.e., participants’ responses to the items). The idea is to estimate a value for the unknown parameter that maximizes the probability of getting the data that were observed.

9) Rotation is a technique used to make the results of factor analysis easier to interpret. In essence, the item responses (variables) are graphed and the axes of the graph are rotated so that clustering between variables is more obvious. Oblimin rotation (versus varimax rotation) was chosen here because it allows the factors to be non-orthogonal (i.e., correlated with one another). This makes sense because the items are likely to represent different factors or dimensions, which together represent ownership. Kaiser normalization involves normalizing the factor loadings, or correlations with factors, prior to rotating and then denormalizing them after rotation.

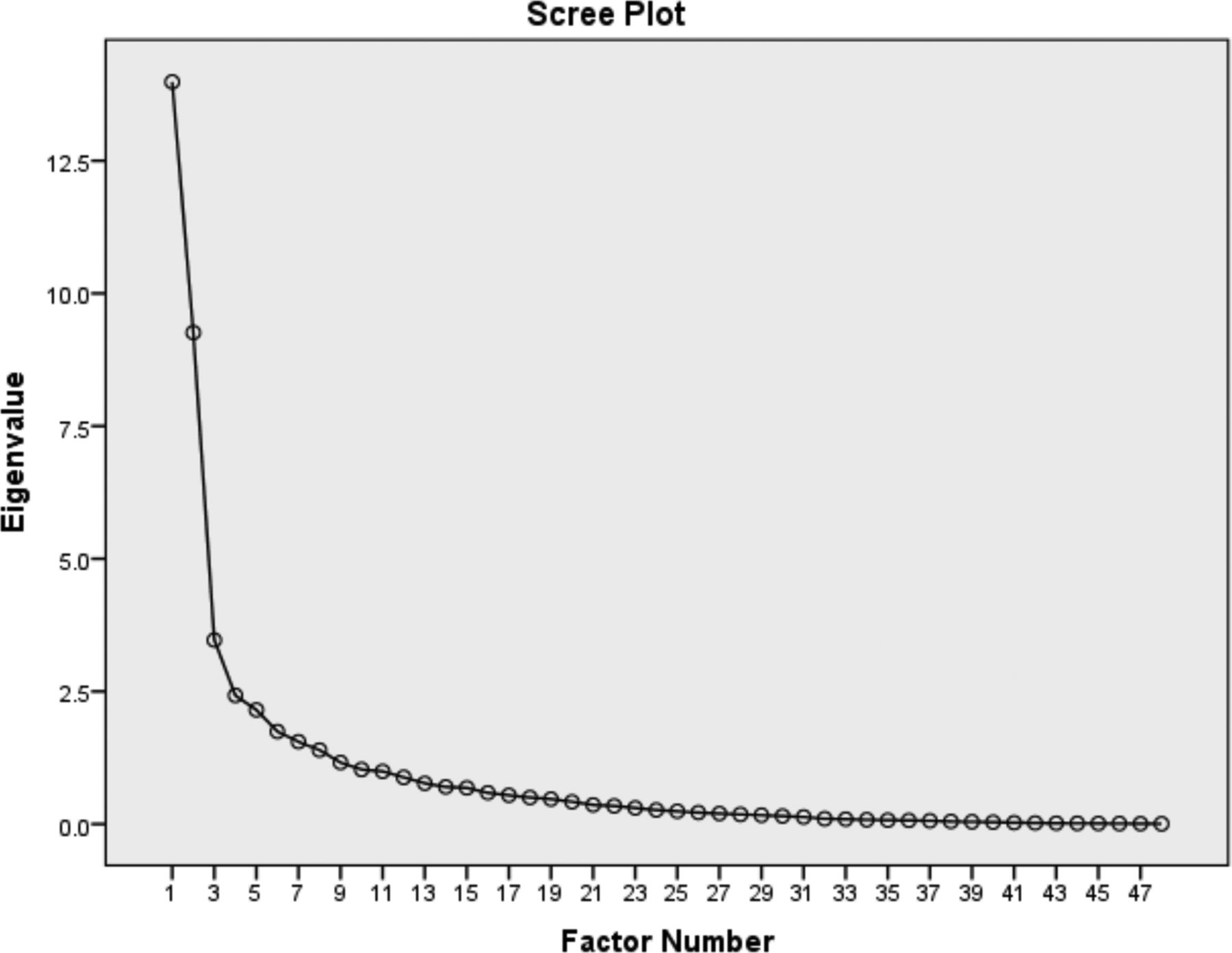

10) A scree plot is a visual depiction of the eigenvalues. By examining the slope of the line, you can see that most of the variance is explained by three factors. The slope doesn't change much with additional factors.

11) Eigenvalues are scalars associated with a linear transformation: each one indicates how much the transformation stretches or shrinks its corresponding eigenvector. They are helpful in this case because they reveal how many factors are likely to be explaining most of the variance in the data. If an eigenvalue is low (below 1 is the rule of thumb, although this is a low bar), then the corresponding factor is not explaining much of the variance in the data.
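A small worked example may make the rule of thumb more concrete. Suppose, purely for illustration, that a survey has only two standardized items whose responses correlate at r = 0.6 (a made-up value). The eigenvalues of their correlation matrix can be found by hand:

```latex
R = \begin{pmatrix} 1 & r \\ r & 1 \end{pmatrix},
\qquad
\lambda_1 = 1 + r = 1.6,
\qquad
\lambda_2 = 1 - r = 0.4,
\qquad
\lambda_1 + \lambda_2 = 2 .
```

The eigenvalues sum to the number of items, so the first accounts for 1.6 / 2 = 80% of the total variance and the second for 20%. Only the first exceeds 1, so under the rule of thumb these two items would be summarized by a single factor.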

12) Loadings are the regression coefficients for each item with the factor. Different researchers advocate for different rules of thumb regarding what are acceptable thresholds for factor loadings. Loadings over 0.6 are generally considered good, while smaller loadings can be acceptable with a sufficiently large sample. For more on this, see: http://imaging.mrc-cbu.cam.ac.uk/statswiki/FAQ/thresholds

13) The entire instrument is included as it was administered so that readers can evaluate it for themselves.

14) This "course type" factor would be better described as "degrees of agency", as it is described in the text.

15) Factor loadings can be both positive and negative. This item has a negative wording, so it is not surprising that it negatively correlates with the other items in this factor. The important feature of factor loadings is their absolute value. This is also important for thinking about the construct itself - negative values indicate something about what the construct is not, while positive values indicate something about what the construct is.

16) Factor loadings can be both positive and negative. This item has opposite wording to the items that have positive factor loadings, so it is not surprising that it negatively correlates with those items. Given these values, a better name for this construct might be "lack of agency."

17) Maximum likelihood extraction is a technique that is part of exploratory factor analysis (EFA). A contrasting technique would be principal components analysis (PCA), which analyzes all of the variance in the items rather than only the variance the items share. This is fairly technical, but the main point is that EFA using this technique should be used (versus PCA) if there is a theoretical reason to connect the items; in other words, the items represent some latent construct. In this case there is a good reason: the qualitative work that preceded this work. For more on the difference between EFA and PCA, see: http://www2.sas.com/proceedings/sugi30/203-30.pdf

18) Multiple sources of evidence are used to make judgments about whether items should be removed. It is important to remember that if items represent particular theoretical aspects of the construct of interest, it may be important to keep them and consider why the empirical evidence is not consistent with what was theorized.

19) The final, recommended version of the instrument is included, with the items that represent each dimension grouped together, to make it easier for readers to use. Grouping items related to a single factor together helps respondents stay focused on the particular idea or phenomenon and reduces their cognitive load.

20) Each point on the scale is labeled so that respondents have a better sense of what each point means. Responses to Likert scale items like this are considered ordinal variables rather than continuous variables because the difference between points on the scale may differ in a non-linear fashion.

21) This next component of the work strengthens the argument that the Project Ownership Survey is a valid measure of undergraduate life science students' sense of ownership of their lab learning experiences by comparing levels of ownership among students who would reasonably be expected to differ in their ownership.

22) This is one piece of evidence that the students differed in the experience they were responding about.

23) This is a second piece of evidence that the students' experiences differed between the two course types.

24) One-way ANOVA is used to test whether there is a statistically significant difference in the means of two or more independent groups.
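To show what a one-way ANOVA actually computes, the sketch below calculates the F statistic by hand: the ratio of variation between group means to variation within groups. The scores and the function name are hypothetical and are not taken from the paper. Turning F into a p value requires the F distribution, which a statistics package would normally supply.

```python
def one_way_anova_f(*groups):
    """Return (F, df_between, df_within) for two or more independent groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [sum(g) / len(g) for g in groups]

    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, group_means))
    # Variation of individual scores around their own group mean.
    ss_within = sum((x - m) ** 2
                    for g, m in zip(groups, group_means) for x in g)

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical ownership scores for students in two course types.
research_course = [4, 5, 4, 5, 3]
traditional_lab = [2, 3, 3, 2, 4]

f_stat, df_b, df_w = one_way_anova_f(research_course, traditional_lab)
print(round(f_stat, 2), df_b, df_w)
```

A large F means the group means differ by more than would be expected from the spread of scores within each group.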

25) It is more typical to calculate ANOVAs with sum scores (sums of responses to all of the items), because the items are thought to represent a latent construct rather than to have stand-alone meaning. ANOVAs for each of the items were calculated here because this was the first description of the survey and this level of analysis can yield insight into how each item is behaving. Moving forward, this kind of calculation should be done with sum scores for each subscale (project ownership and emotion), since each represents a different dimension, or the entire scale.

26) P values of <0.05 are generally interpreted as statistically significant. Yet, many concerns have been raised about the limited meaning of the p value, the arbitrary cut-off of 0.05 to determine statistical significance, and the possibility of Type I errors when multiple comparisons are done (as was done here). The reporting of effect sizes (as was done here) and using corrections for multiple comparisons, such as the Bonferroni correction, can help to address these concerns.
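The Bonferroni correction mentioned above is simple enough to sketch: the significance threshold is divided by the number of comparisons. The p values below are made up for illustration and the function name is hypothetical.

```python
def bonferroni(p_values, alpha=0.05):
    """Return (adjusted threshold, list of significance flags)."""
    threshold = alpha / len(p_values)  # stricter cut-off for each comparison
    return threshold, [p <= threshold for p in p_values]


# Hypothetical p values from four item-level comparisons.
p_values = [0.001, 0.012, 0.030, 0.200]
threshold, significant = bonferroni(p_values)

print(threshold)
print(significant)
```

Note that the third comparison would count as significant at the usual 0.05 cut-off but not after the correction, which is how the correction reduces the risk of Type I errors.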

27) Cohen's d is an indicator of the size of the standardized difference between two means. For more on interpreting Cohen's d, see: http://rpsychologist.com/d3/cohend/
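As a minimal sketch, Cohen's d for two independent groups can be computed by dividing the difference in means by the pooled standard deviation. The pooled-standard-deviation version is assumed here because it is the most common one; the paper's exact calculation is not reproduced. The scores and function name are hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Standardized mean difference using the pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_variance = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_variance)


# Hypothetical ownership scores for students in two course types.
research_course = [4, 5, 4, 5, 3]
traditional_lab = [2, 3, 3, 2, 4]

d = cohens_d(research_course, traditional_lab)
print(round(d, 2))
```

Because d is expressed in standard deviation units, it can be compared across studies that use different scales, which a p value alone does not allow.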

1) The conclusions are not simply a restatement of the results. Rather, the results are briefly summarized and the authors focus on describing unanticipated findings, study limitations, and applications and implications of the work.

LSE authors have the liberty to assign appropriate names to each section of their paper. Using common section titles helps the reader navigate the work. In this case, the authors chose to call the section that interprets their results Conclusions instead of Discussion.

2) The key here is that multiple sources of evidence were used to demonstrate the POS is a valid measure of students' ownership of their lab learning experiences. This is consistent with current thinking about validity as a holistic idea as described by Samuel Messick. For more on validity theory, see explanations on YouTube from John Hathcoat at James Madison University. 5-minute introduction at: https://www.youtube.com/watch?v=rYc-coraFNk, and 40-minute lecture at: https://www.youtube.com/watch?v=_HS4lxsoR4Q

3) Cronbach's alpha and results of the factor analysis are the psychometric properties described here.

4) This is an important point because the authors are not "throwing out" the idea that student agency is important and perhaps unique to research courses. Rather, they will likely go back to the drawing board in designing items to better capture the idea of agency or what unique agency students experience in research courses versus traditional lab courses.

5) All studies have limitations. The authors are sometimes (although not always) best positioned to see the limitations of their study. Pointing them out makes it clear that the authors have thought through their results and are not overstating their claims. Some papers even have a separate Limitations section.

6) This is a good recommendation given features of the study design and the evidence presented here.

7) The psychosocial aspects of undergraduate research experiences refer to how students view or position themselves while doing research and as a result of doing research. Students' psychosocial development, such as shifts in their identities as scientists or their confidence in their ability to do science research, is important to measure because it is likely to influence the choices students make in the future, such as whether they choose to engage in other research experiences, complete a science major, or pursue a graduate degree or a science-research related career.

8) It is reasonable to expect that students will learn to think in different ways as a result of doing research compared to other types of learning experiences. However, these outcomes are important to measure because research experiences are likely to vary widely and we cannot understand how to design effective research experiences without collecting and analyzing data to determine what students learn or how they develop.